April 26 27 28 29 30, 2023

Dear colleague,

I appreciate all the concerned letters I got from folks after my recent blog post regarding ChatGPT and AI. For those of you worried that your colleague Dave has gone off and drunk the Tech Bro Kool-Aid all of a sudden, let me do two things today:

- Assuage your fears by saying what I'm not saying when I say I was wrong about dismissing ChatGPT and its ilk.

- Explain a bit more about why I think tech like this is going to impact us.

And then tomorrow, I'll conclude the series by getting specific about what I think this is likely to change for us and what I think it's likely to leave untouched or deepened.

What I'm not saying when I'm saying I was wrong about dismissing ChatGPT and AI

I'm not writing this AI series because I'm sold on the tech-ification of learning or replacing teachers with AIs. It's the opposite of those two things, actually. What I think is that this technology is likely to just multiply our confusion as a society regarding what an education is for. And I think that this technology will only end up making teachers more precious, not less. At least, good teachers, folks invested in striking Drucker's balance in their classrooms with their students.

For the sake of total clarity, then, here are three things I want to be very explicit about so that you don't worry needlessly that I'm abandoning you or the pedagogy we've crafted together since I began blogging in 2012.

1) No matter how useful AIs like ChatGPT become, my classes will still primarily rely on the technology of 70-cent spiral notebooks. We'll take notes, we'll write short answer responses, we'll free-write, we'll reflect, all by hand. Analog just has so many human benefits; in a world of AI, I think the unique beauty and productivity to be had with analog reading and writing will only increase. At the same time, digital reading and writing will offer even greater labor-saving conveniences than they do today. And so, just as today I sometimes have students submit their warm-ups on Canvas so that I can quickly see what they've all written in one place, I'm sure that as AI advances there will be cases where I'll pragmatically show students how to use it. I'm not interested in teaching my students to take dishonest shortcuts in their writing (I wrote on cheating a few days ago to illustrate this), but I am interested in helping them leverage technology to become the best writers they can be.

To me, tech use in the classroom isn't either-or — it never has been.

At the same time, I expect to continue biasing my instruction toward analog reading and writing.

2) I'll still be all about helping my students to become better thinkers, readers, writers, speakers, and people. Now notice, I said “my students” — not, “my students and their devices.” While AI does look to me now to be a novel development in the history of human toolmaking, as far as my students and I are concerned, it follows this basic principle of tools: the quality of the tool's output depends on the quality of the user's input to the tool. E.g.,

- Give me a chainsaw, and I can chop a log; give an ice sculptor a chainsaw, and she can make a miracle.

Right now, AI is like that — it's a multiplier of the user's ability to think well with the tool.

And so, the only way to help my students think well that I'm aware of is the same as it's always been. They've got to know things, argue about things, read things, write things, and speak and listen about things. In other words: These 6 Things won't suddenly become outdated.

3) In a bit of an expansion of that last point: for my students to think well, they'll need to A) know lots and B) argue lots. I've long accepted what cognitive scientists have: getting a computer to produce knowledge is a Grand Canyon's chasm away from being able to think well with that knowledge. You've got to know things, lots of things, in order to comprehend what you read or hear, to argue well, to think clearly or deeply.

In short: longtime readers need not fear. Though my attention is very much on AI at the moment, that's not because I see some need to change my pedagogy.

So deep breath, colleague.

You and me? We're good.

But I've still not really answered the question, have I?

If I don't intend to jump on the ChatGPT bandwagon, why write this series of posts on AI?

Why AI has got my attention and why I think it will impact our work

In my last article, I shared a bit about my experiences with AI over the past three months; since writing it, I've added an addendum on additional experiences that sort of blew my mind. If you've not scanned through that article, do.

But there are more reasons I'm interested in this topic now.

This topic reminds me of when I started out as a writer

My learning journey with the AI stuff lately has started reminding me of my earliest days as a teacher-writer. Back then, my blog was called Teaching the Core, and it was all about reading and understanding the Common Core State Standards.

I had no knowledge of the standards when I started writing in 2012 — no knowledge of any standards, really — but I was sensing it was time for me to go all in on figuring out what the CCSS said and what their implications were for my classroom.

My goal wasn't to be a “Common Core guy” — it was to be a teacher guy. I wanted to understand teaching better. I wanted to understand the national conversation. I wanted to get to the bottom of fundamental questions — questions that, when I started writing, I didn't even know I had.

And look, eleven years after I began writing, here's my take: the Common Core didn't turn out to be that big of a deal. Some states adopted them, many others adopted them with slight name changes, buzzwords spawned from them, fancy schmancy “next-gen” tests attempted to assess them. And probably the biggest effect of the Standards was that they advanced, mostly for the worse, this idea that good teaching focuses on skills and not knowledge. (Chapter 3 of These 6 Things goes into depth on why I think this is a problem.)

But at the end of the day, the national conversation has moved on.

Here's why AI isn't like that

AI today is similar to the Common Core in 2012 in a few ways. Namely, amongst teachers the topic of AI tends to generate:

- Lots of angst

- Lots of prognostication

- Lots of speculation

But AI is different from the Common Core in 2012 in some really important ways:

- It's not a PDF — I'll elaborate on that below.

- It's not single-sourced — meaning, AI isn't the baby of an innocuous organization called the Council of Chief State School Officers; instead, all of the largest and most powerful tech companies in the world are engaged in a breakneck arms race to develop more advanced forms of AI.

- It's not just freaking out educators — there's doomsday talk in all kinds of professions, including lawyers, coders, and doctors.

- It's not just about the USA — meaning, both the users and the developers of this technology span the globe.

It's not a PDF — a bit more on this

Here's a link to the Common Core State Standards for literacy. It's identical to the PDF I was linking to when I started writing articles about it in 2012, and it's identical to the PDF I used when writing my first book in 2014.

In eleven years, the document hasn't changed. The world has, but the document hasn't. It's stuck in time, like the mosquito in Jurassic Park.

AIs like ChatGPT are the total opposite of this. Their rate of change is literally exponential. A few facts and images to illustrate:

In 2012, when I was writing about Common Core, OpenAI [1] was four years from existing. The Common Core is history now. ChatGPT is a global conversation starter.

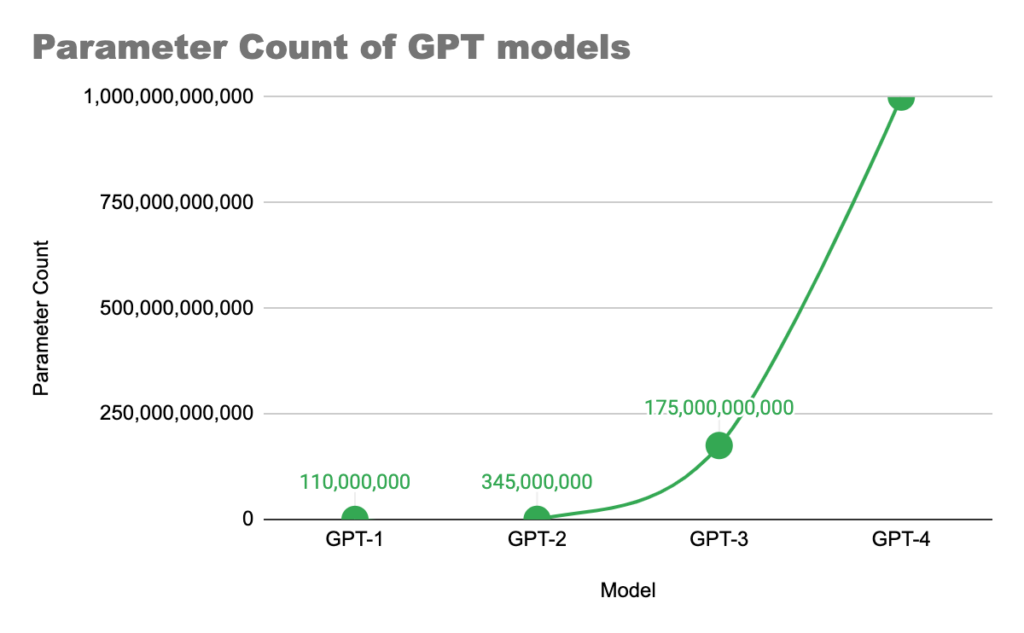

In 2018, OpenAI released its first version of GPT. [2] It had ~117 million parameters. (A 'parameter' is like a computer version of a synapse in your brain. Synapses are the connections between neurons. To give you a comparison, your brain has about 86 billion neurons in it and roughly 100 trillion synapses.)

Take a look at what's happened to the parameter count of each model of GPT since the first one:

In short, AI has my attention because whether you're looking at parameter count or training data set sizes or companies involved or users of the technology, you're looking at hockey stick growth.

Tomorrow, I'll conclude this series on what I think you'll find a high note.

Best to you today, colleague,

DSJR

Footnotes:

[1] OpenAI is the company that owns ChatGPT.

[2] GPT = “generative pre-trained transformer;” it's the AI that you chat with through ChatGPT. Generative = it generates words. Pre-trained = it's trained on a “data set” (AKA millions and millions of web pages). Transformer = technical term for the kind of neural network it uses.
